Extracting data from a thousand websites is one challenge; turning it into a single, clean, analyzable dataset is another. That’s where most data projects quietly fall apart, when pipelines break silently, quality degrades, and no one trusts the output. The journey from messy HTML to analysis-ready intelligence is where pipelines either become missed forecasts, broken dashboards, stalled ML models.

The difference comes down to moving from fragile, one-off scripts to something more like an industrialized process, a system built not just to collect, but to clean, govern, and deliver data reliably, day after day. That reliability depends on five tightly connected layers, each solving a different scaling failure.

From working with large-scale data programs, one pattern is clear. Scalable web data extraction rests on five tightly connected pillars.

The Five Layers of an Industrial-Strength Data Pipeline

A future-proof pipeline is a connected system where failures in one layer don’t cascade into outages or data loss. For data leaders, this integrated approach is what reduces organizational complexity and ensures data-driven products are powered by accurate, timely information.

1. Design & Architecture: The Foundation at Scale

Before gathering any data, it’s essential to establish a strong foundation for exponential growth.

- Dynamic Infrastructure: Traffic spikes, retries, and failures are handled automatically, so extraction doesn’t stall when volume increases. Under the hood, this is powered by Kubernetes, automatically scales to manage traffic surges from 100 to 100,000 requests without any manual effort.

- Decoupled Components: We utilize a microservices architecture that separates crawling, parsing, storage, and delivery, minimizing the risk of cascading failures.

- API-First Design: Each component features clear APIs, ensuring easy monitoring and enabling smooth integration of structured data into your existing business intelligence tools and data warehouses.

Even the most scalable architecture falls short if it cannot consistently access data. The next challenge is to ensure that this architecture can reliably access data from the modern web, highlighting the importance of intelligent crawling.

2. Intelligent Crawling: Beyond Basic HTTP Requests

Today’s web environments require more than just basic HTTP requests. This layer guarantees reliable access to data, even from complex and secure sources.

- AI-Driven Data Extraction: Large Language Models (LLMs) analyze content for meaning rather than just its HTML layout, allowing for precise data extraction from unstructured text and dynamic content. They augment traditional methods rather than replacing them.

- Smart Crawling Agents: These systems adapt to specific website patterns, modifying their crawling techniques to imitate human behavior and avoid detection by anti-bot systems.

- Intelligent Content Scheduling: This approach prioritizes valuable sources and adjusts refresh rates based on data change frequency, maximizing resource efficiency and minimizing costs.

Once reliable access is secured, the focus shifts from collection to refinement. The raw data must now be transformed into a clean, unified, and trustworthy ready-to-use dataset.

3. Normalization & Comprehensive QA: From Raw to Reliable Data

This is where unrefined, complex data is transformed into a clean, usable asset. It directly tackles the essential challenges of data quality and scalability for enterprises.

- Automated Schema Mapping: Our AI technology standardizes different data labels (like “price,” “cost,” and “amount”) into a consistent format.

- Human-in-the-Loop Validation: Ensures that when automation isn’t confident, human experts step in to review and correct the data. Their input is used to improve the system, reducing similar issues in the future.

- Multi-Layer Quality Assurance: We implement various automated checks throughout the pipeline to ensure data accuracy and reliability. These checks confirm data is current, complete, and consistent, identifying unusual patterns that may signal errors, thereby maintaining trustworthy data as sources and scale change.

- Entity Resolution Hub: This is vital for investment and market intelligence. It uses fuzzy matching and machine learning to consolidate variations of a name, such as “Apple Inc.,” “Apple,” and “AAPL”, into a single entity, ensuring your analytics remain precise.

At an enterprise level, quality extends beyond accuracy; it also encompasses responsibility. Therefore, effective governance is not just an add-on, but a crucial element of the entire process.

4. Data Governance: Integrate Compliance into the Pipeline

For global companies, a pipeline must be designed to be legally defensible. This principle directly addresses the compliance and risk management duties of today’s data leaders.

- Automated PII Redaction: Our system identifies and manages personal data during processing, which includes any information that can identify an individual, like names, emails, and addresses found online.

For example, Public job postings, forum discussions, or business listings may include contact details that can be detected and removed to keep downstream datasets suitable for business use.

By applying these controls within the pipeline, rather than after data delivery, organizations can better support alignment with privacy regulations such as GDPR and CCPA while continuing to use web data responsibly for analytics and decision-making.

- Audit Trails & Lineage: We provide a comprehensive log that tracks data from its origin to its use. This is crucial for internal audits and for demonstrating compliance with regulatory bodies.

- Policy Enforcement: Our automated system adheres to robots.txt, implements rate limiting, and enforces terms of service, safeguarding your company from legal risks and ensuring ethical data collection practices.

With a well-governed, high-quality data stream in place, this final pillar guarantees that your valuable data delivers immediate business results.

5. Data Integration: Creating Business Value in Essential Areas

The true measure of a pipeline lies in its ability to integrate smoothly and support decision-making. The final element ensures that data adds value rather than creating confusion.

- Model-Ready Outputs: Data is provided in a pre-processed format, making it ready for immediate use in machine learning models and analytics platforms.

- Real-Time APIs & BI Layer: Quick access enables live dashboards and applications, while curated datasets are tailored for tools like Tableau and Power BI.

- Cloud Data Warehouse Integration: Direct connections to platforms such as Snowflake and Databricks allow data to flow seamlessly into your central repository, primed for analysis.

Key Elements for a Self-Sustaining Data System

Websites are constantly changing, and legal requirements are always evolving. To ensure a production-grade system remains effective, it must be self-sustaining. This includes automated change detection, self-healing capabilities, and ongoing compliance monitoring. Without these features, your system could become a high-maintenance burden that hinders your data strategy.

This requires:

- Automated Change Detection & Alerting: This enables quick detection of any changes to the website layout that may disrupt data extraction.

- Self-Healing Extraction Logic: This automatically adapts to changes without requiring manual input from engineers.

- Continuous Compliance Monitoring: This ensures that your data collection practices adapt automatically to new regulations.

- Performance & Cost Optimization: The aim of this is to make sure that the pipeline functions effectively as your data requirements grow.

Without these capabilities, data teams spend more time fixing pipelines than using data.

Forage AI: Your Fully Managed Data Extraction Solution

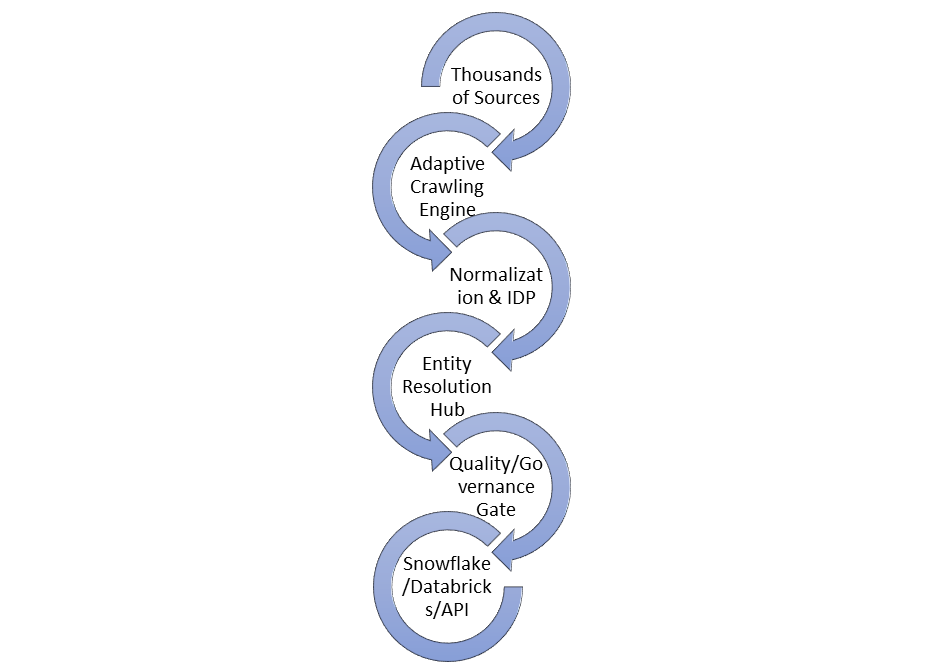

These five pillars establish a seamless and cohesive workflow in our end-to-end pipeline architecture, designed to meet the needs of enterprises that prioritize reliability, compliance, and scalability

- Thousands of data sources: The process starts by gathering data from thousands of sources, such as websites, platforms, and public data endpoints.

- Adaptive Crawling Engine: Instead of static scripts, an adaptive engine is used that automatically responds to changes in site structure, layout, or access patterns, ensuring stable data collection as sources evolve.

- Normalization & Intelligent Document Processing (IDP): Raw, unstructured data is then cleaned, structured, and standardized into consistent formats, making it usable for analytics and AI models.

- Entity Resolution Hub: Different references to the same entity (e.g., company names, products) are identified and unified here, preventing duplication and ensuring a single, accurate view across all datasets.

- Quality & Governance Gate: Before delivery, data undergoes validation checks, quality controls, and compliance rule enforcement, embedding governance directly into the pipeline to meet enterprise and regulatory standards.

- Enterprise-Ready Delivery: Finally, the refined data is delivered via integrated outputs like Snowflake, Databricks, or APIs, allowing seamless integration into existing workflows, analytics platforms, and decision-making processes.

Built for Enterprise Data Needs

This architecture reflects Forage AI’s core principles of scalability, intelligence, quality, and compliance, guiding every stage from data collection to consumption. By managing the entire pipeline end-to-end, Forage AI enables organizations to scale their data operations confidently, without the operational burden of maintaining scripts, tools, and infrastructure.

Conclusion: Building a Strong, Reliable Data Foundation

Transitioning from individual scripts to a robust data pipeline turns web data from a one-off research task into a strategic business asset. This shift allows teams to move away from constant infrastructure maintenance and rely on a dependable system that delivers ready-to-use data for decision-making.

Forage AI addresses this challenge by offering a fully managed data pipeline within a single, governed environment. By bringing together adaptive crawling to handle website changes, entity resolution to keep data clean and consistent, and compliant data delivery to ensure safe usage, the complexity of managing web data is significantly reduced. This approach enables organizations to scale data operations without increasing headcount, allowing teams to focus on insights rather than infrastructure.

Instead of relying on fragile scripts, organizations can take a more sustainable, long-term approach to data operations. Working with the right partner helps build pipelines that are reliable, compliant, and designed to evolve as data needs grow.

Connect with Forage AI experts to explore how a reliable, well-governed data pipeline can support your goals, without adding operational complexity.